In today’s digital workplace, each task, process, and activity leaves a small trace in the applications and IT systems used to carry it out. These traces are recorded in event logs, which form the foundation of the data science behind process mining software.

In this article, we look at what event logs are and what their benefits and limitations are in process mining. Each event in an event log refers to a case, an activity, and a timestamp, which is why event logs serve as comprehensive collections that reflect the sequences of events within a given process.

What exactly are event logs?

An event log is a structured file containing records, with timings, of hardware and software events and activities within a computer system or database. Event logs are typically used to track changes and activity in a database, such as changes to the data structure, data entry records, and even user logins.

Event logging provides a standard way for software applications and operating systems to record important events or changes, although the exact format is system-specific. Event logs can originate from the operating system itself and are common in many IT systems, including Windows computers, Customer Relationship Management (CRM) systems, and many Enterprise Resource Planning (ERP) systems.

How are event logs used in process mining tools?

Event logs form the foundation of process mining. They can be used to record several linked business processes as well as one or more variations of a particular business process in a team or business unit.

Process mining assumes the existence of a structured event log detailing distinct events linked to specific cases, activities, and timestamps.

You can think of event logs as the digital footprints of business operations. Each event record tells us something about the tasks, processes, and work executed in the team or business unit being analyzed.

Examples of event logs found in enterprise resource planning (ERP) systems

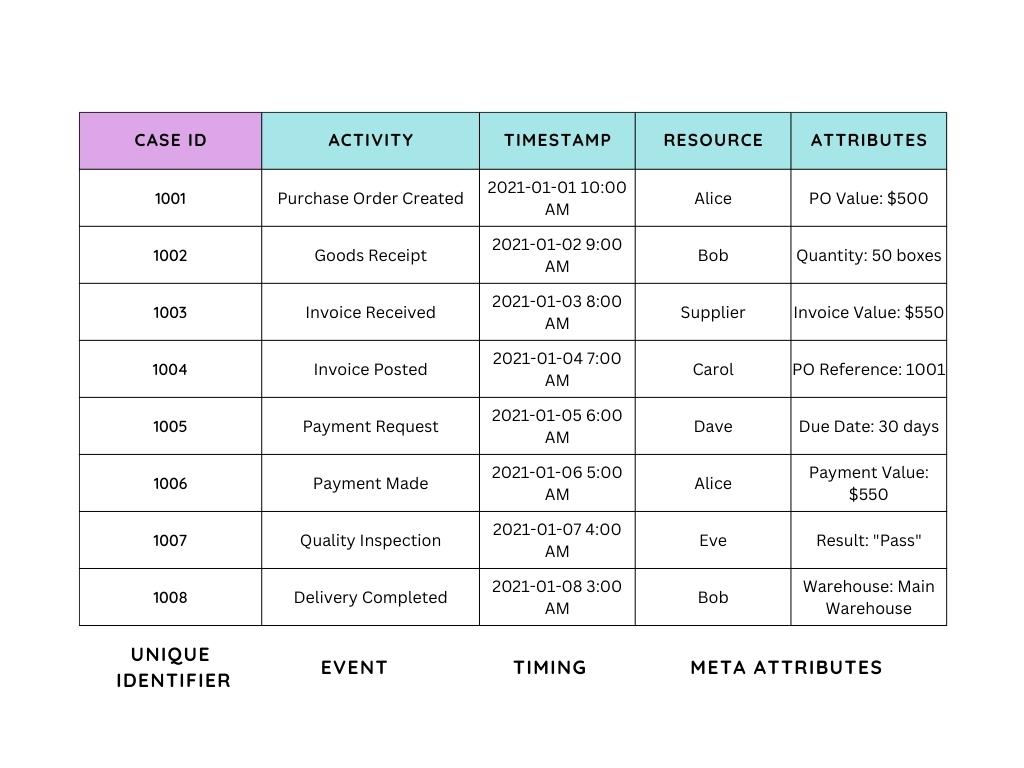

Individual events are listed in the event log along with standard properties:

- Case – a case is a single instance of a process, such as one purchase order; in the event log it appears as a trace, the sequence of events recorded for that instance.

- CaseID – a CaseID is a unique identifier for any business object or transaction that is tracked in event logs.

- Activity – a task or action taken within a business process, for example, “approve,” “reject,” or “request.”

- Timestamps – a timestamp indicates precisely when each activity took place.

- (Meta) attributes – event logs can store additional attributes, for example, the process category or product type being processed, or which person or department performed or changed a record.
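To make these properties concrete, here is a minimal Python sketch of an event record and of how events that share a CaseID are grouped into one case (trace) ordered by timestamp. The `Event` class, the `to_traces` helper, and the sample purchase-order events are hypothetical and only illustrate the data model; real process mining tools use their own schemas.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class Event:
    """One event-log record: CaseID, activity, timestamp, and optional (meta) attributes."""
    case_id: str
    activity: str
    timestamp: datetime
    attributes: dict = field(default_factory=dict)


def to_traces(events):
    """Group events by CaseID and return each case's activities in timestamp order."""
    by_case = defaultdict(list)
    for event in events:
        by_case[event.case_id].append(event)
    return {
        case_id: [e.activity for e in sorted(case_events, key=lambda ev: ev.timestamp)]
        for case_id, case_events in by_case.items()
    }


# Hypothetical events, deliberately not in time order within each case.
log = [
    Event("PO-1", "approve", datetime(2024, 1, 3, 14, 0), {"department": "Finance"}),
    Event("PO-2", "request", datetime(2024, 1, 2, 10, 30)),
    Event("PO-1", "request", datetime(2024, 1, 2, 9, 0), {"department": "Sales"}),
    Event("PO-2", "reject", datetime(2024, 1, 4, 11, 15)),
]

print(to_traces(log))
# {'PO-1': ['request', 'approve'], 'PO-2': ['request', 'reject']}
```

Sorting by timestamp inside each case matters because source systems do not always store events in the order they happened.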

How event logs are used in process mining

Event logs are the core data model and source of data used in process mining. They are the raw transaction data that is gathered and harmonized from different systems for process analysis.

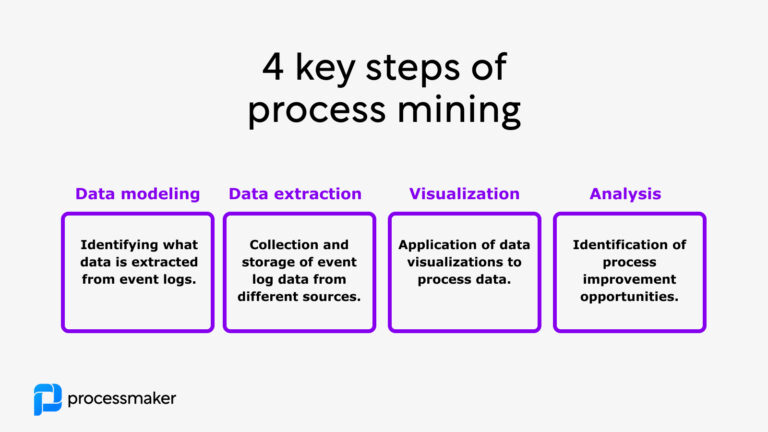

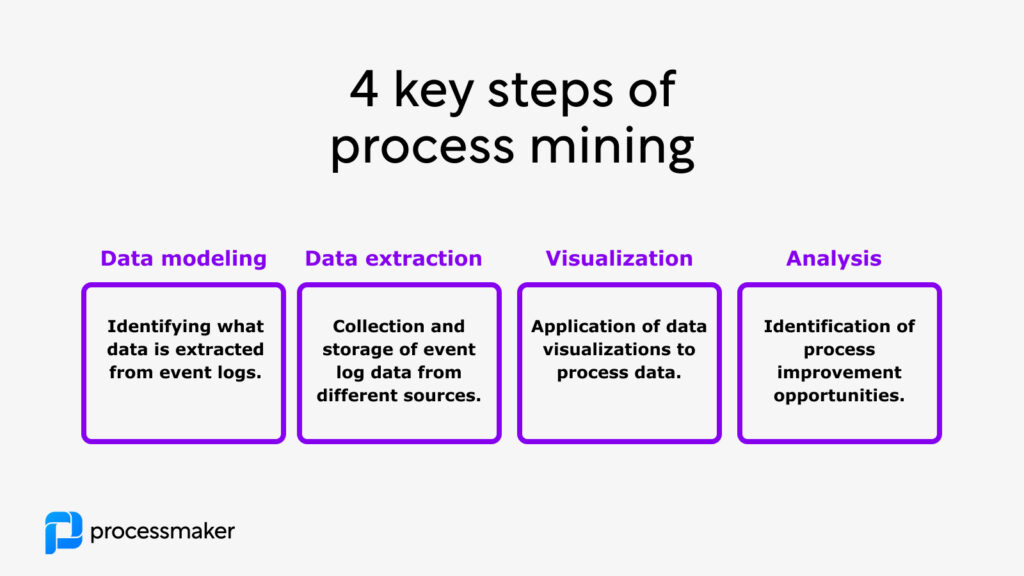

How does event log data turn into process analytics? You can imagine the process happening in four key steps:

- data modeling – identify what source systems and raw data will be used in data analytics. This step can include assessing what (meta) attributes can be collected and utilized in event logs from different source systems.

- data extraction – in this step, data is extracted, transformed, and loaded (ETL) from different source systems into a core database. The core goal is to form a consistent and clean data set for process analysis.

- data visualization – once process data has been collected and harmonized from event logs, it can be visualized using process mining visualization algorithms, for example as process variant graphs.

- data analysis – the final and key step in process mining is analyzing the process data to find opportunities to eliminate, standardize, or automate inefficient processes.
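The visualization and analysis steps often start from two simple aggregates: process variants (the distinct activity sequences and how many cases follow each) and directly-follows relations (how often one activity immediately follows another), which underlie many process maps. The Python sketch below computes both from hypothetical, already-cleaned traces; it is a simplified illustration, not the algorithm of any particular tool.

```python
from collections import Counter

# Hypothetical traces: case ID -> ordered list of activities (already extracted and cleaned).
traces = {
    "C1": ["request", "approve", "pay"],
    "C2": ["request", "approve", "pay"],
    "C3": ["request", "reject"],
    "C4": ["request", "approve", "approve", "pay"],
}


def variants(traces):
    """Count how many cases follow each distinct activity sequence (process variant)."""
    return Counter(tuple(seq) for seq in traces.values())


def directly_follows(traces):
    """Count how often activity a is immediately followed by activity b within a case."""
    edges = Counter()
    for seq in traces.values():
        for a, b in zip(seq, seq[1:]):
            edges[(a, b)] += 1
    return edges


for variant, count in variants(traces).most_common():
    print(count, " -> ".join(variant))

for (a, b), count in sorted(directly_follows(traces).items()):
    print(f"{a} -> {b}: {count}")
```

The edge counts can be drawn as a graph whose nodes are activities; rare variants such as the repeated "approve" in C4 are often the first place analysts look for rework.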

Saying that process mining consists of four steps is an oversimplification. In reality, many additional steps may be required, depending on where the event log data is gathered and how much detail it contains. Typically, you will need to integrate and extract data from each source system separately, and each data source may have its own requirements for event data extraction.
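Because each source has its own field names and timestamp formats, extraction often comes down to writing a small adapter per source that maps raw records onto a common case/activity/timestamp schema before merging. This Python sketch assumes two hypothetical sources, an ERP table with text timestamps and a CRM export with Unix epoch seconds; every field name and helper here is made up for illustration.

```python
from datetime import datetime, timezone

# Hypothetical raw records from two source systems with different field names and time formats.
ERP_ROWS = [
    {"doc_no": "4500001", "step": "Create PO", "changed_at": "2024-03-01 08:15:00"},
    {"doc_no": "4500001", "step": "Release PO", "changed_at": "2024-03-01 16:40:00"},
]
CRM_ROWS = [
    {"ticket": "T-88", "status": "Opened", "epoch": 1709280000},
]


def from_erp(row):
    """Map an ERP change record to the common schema (assumes the ERP stores UTC times)."""
    ts = datetime.strptime(row["changed_at"], "%Y-%m-%d %H:%M:%S").replace(tzinfo=timezone.utc)
    return {"case_id": row["doc_no"], "activity": row["step"], "timestamp": ts, "source": "ERP"}


def from_crm(row):
    """Map a CRM record to the common schema; this source stores Unix epoch seconds."""
    ts = datetime.fromtimestamp(row["epoch"], tz=timezone.utc)
    return {"case_id": row["ticket"], "activity": row["status"], "timestamp": ts, "source": "CRM"}


def harmonize(sources):
    """Apply each source's adapter and merge the results into one time-ordered event log."""
    events = [adapter(row) for rows, adapter in sources for row in rows]
    return sorted(events, key=lambda e: e["timestamp"])


log = harmonize([(ERP_ROWS, from_erp), (CRM_ROWS, from_crm)])
for e in log:
    print(e["timestamp"].isoformat(), e["source"], e["case_id"], e["activity"])
```

Normalizing every timestamp to a single time zone before merging is what makes the combined ordering meaningful; getting that assumption wrong for one source silently reorders events.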

Event logs are the starting point of many process mining software solutions.

Towards standardization in event logging data

While event logs can be in different formats in systems and databases, a standard has emerged for how event data is analyzed and visualized in process mining tools. eXtensible Event Stream (XES) is an open standard for representing event data for process mining. It is an XML-based format for representing event logs, which are collections of events that occur within a system or process.

XES provides a unified framework for the encoding and exchange of event data, allowing different systems to interoperate and share event logs. XES also allows for the creation of custom extensions and annotations, allowing organizations to add more detailed information to their event logs.
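As a rough illustration of the XES structure: a log contains traces, each trace contains events, and attributes are typed elements with a `key` and a `value`, such as `concept:name` for names and `time:timestamp` for times. The sketch below uses Python's standard `xml.etree.ElementTree` to write a minimal XES-style document. It omits the extension and global declarations that a complete XES file would normally include, so treat it as a sketch rather than a validated exporter; the `to_xes` helper is hypothetical.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone


def to_xes(traces):
    """Serialize {case_id: [(activity, timestamp), ...]} into a minimal XES-style XML string.

    Uses the standard attribute keys concept:name and time:timestamp, but omits the
    extension and global declarations a complete XES file would normally contain.
    """
    log = ET.Element("log", {"xes.version": "1.0"})
    for case_id, events in traces.items():
        trace = ET.SubElement(log, "trace")
        ET.SubElement(trace, "string", {"key": "concept:name", "value": case_id})
        for activity, ts in events:
            event = ET.SubElement(trace, "event")
            ET.SubElement(event, "string", {"key": "concept:name", "value": activity})
            ET.SubElement(event, "date", {"key": "time:timestamp", "value": ts.isoformat()})
    return ET.tostring(log, encoding="unicode")


xml_text = to_xes({
    "PO-1": [
        ("request", datetime(2024, 1, 2, 9, 0, tzinfo=timezone.utc)),
        ("approve", datetime(2024, 1, 3, 14, 0, tzinfo=timezone.utc)),
    ]
})
print(xml_text)
```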

While many process mining tools use XES, process mining data can also be stored in other formats, including comma-separated values (CSV) files.
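Reading a CSV event log takes little more than the standard library, provided you know which columns hold the case ID, activity, and timestamp. The column names and sample rows below are assumptions; real exports vary, and this sketch assumes timestamps are ISO 8601 strings.

```python
import csv
import io
from datetime import datetime

# Hypothetical CSV export; the column names case_id, activity, timestamp are assumptions.
CSV_TEXT = """case_id,activity,timestamp,resource
C1,request,2024-05-02T09:00:00,alice
C1,approve,2024-05-02T13:30:00,bob
C2,request,2024-05-03T10:00:00,alice
"""


def read_event_log(f):
    """Parse a CSV event log into dicts, converting ISO 8601 timestamps to datetime."""
    events = []
    for row in csv.DictReader(f):
        row["timestamp"] = datetime.fromisoformat(row["timestamp"])
        events.append(row)
    return events


events = read_event_log(io.StringIO(CSV_TEXT))
print(len(events), events[0]["activity"], events[0]["timestamp"])
```

For a file on disk, pass `open(path, newline="")` instead of the `io.StringIO` wrapper, as the `csv` module documentation recommends. Extra columns such as `resource` come through unchanged as (meta) attributes.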

Limitations of event logs in process analysis

Although event-log-based process mining can do wonders for organizations, and has been proving that for many years, there are still areas where it falls short.

Analysis of the latest but not real-time data

Process mining examines the most recent data taken from information systems, but this does not necessarily give a complete picture of how a company is doing right now. The resulting analytics are offline and more “static”: data is first extracted from event logs at a specific moment in time, then cleaned, and only then analyzed. As a result, standard process mining technologies cannot continuously alert users to potential process deviations.
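The limitation follows from how batch extraction works: anything recorded after the extraction cutoff is simply absent from the data set until the next extraction runs. This toy Python sketch, with a hypothetical event list and a hypothetical `extract_snapshot` helper, shows a snapshot missing a later event.

```python
from datetime import datetime

# Hypothetical event stream: (case ID, activity, timestamp). Events keep arriving after extraction.
stream = [
    ("C1", "request", datetime(2024, 6, 1, 9, 0)),
    ("C1", "approve", datetime(2024, 6, 1, 15, 0)),
    ("C2", "request", datetime(2024, 6, 2, 8, 0)),
    ("C2", "reject", datetime(2024, 6, 3, 11, 0)),
]


def extract_snapshot(events, cutoff):
    """Batch-style extraction: keep only events recorded at or before the extraction time."""
    return [e for e in events if e[2] <= cutoff]


snapshot = extract_snapshot(stream, cutoff=datetime(2024, 6, 2, 12, 0))
missed = [e for e in stream if e not in snapshot]
print(f"analyzed {len(snapshot)} events; {len(missed)} later event(s) invisible until next extraction")
```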

High initial costs

The deployment of process mining tools requires a great deal of work and input from numerous teams and units, which results in a high total cost of ownership. It’s not uncommon for a process mining team to include dedicated data scientists and process mining experts requiring deep domain knowledge.

Heavy reliance on human analysts

Although the ultimate goal of process mining is to enable process improvement, this goal cannot be achieved without the assistance of business analysts, data analysts, and IT professionals. For process mining, there are two major areas where people are absolutely necessary:

- Data interpretation: once the data has been analyzed, insights alone are insufficient; a business analyst is required to interpret the data and identify specific use cases that are consistent with the original goals.

- Data extraction and cleansing: since event log data may be missing, inaccurate, or duplicated, data analysts must spend time cleaning it before it can be used.
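Part of this cleansing can be automated. The sketch below, using hypothetical rows and a hypothetical `clean` helper, drops events that lack a case ID, activity, or timestamp and removes exact duplicates. Inaccurate values are much harder to detect automatically, so rejected rows are returned for an analyst to review rather than silently discarded.

```python
from datetime import datetime

# Hypothetical raw rows showing the problems named above.
raw = [
    {"case_id": "C1", "activity": "request", "timestamp": "2024-07-01T09:00:00"},
    {"case_id": "C1", "activity": "request", "timestamp": "2024-07-01T09:00:00"},  # duplicate
    {"case_id": "", "activity": "approve", "timestamp": "2024-07-01T10:00:00"},    # missing case
    {"case_id": "C1", "activity": "approve", "timestamp": ""},                     # missing time
    {"case_id": "C1", "activity": "pay", "timestamp": "2024-07-02T16:00:00"},
]


def clean(rows):
    """Drop rows missing required fields, remove exact duplicates, and parse timestamps."""
    seen = set()
    cleaned, rejected = [], []
    for row in rows:
        if not (row.get("case_id") and row.get("activity") and row.get("timestamp")):
            rejected.append(row)  # kept aside for manual review
            continue
        key = (row["case_id"], row["activity"], row["timestamp"])
        if key in seen:
            continue  # exact duplicate of an event already kept
        seen.add(key)
        cleaned.append({**row, "timestamp": datetime.fromisoformat(row["timestamp"])})
    return cleaned, rejected


cleaned, rejected = clean(raw)
print(len(cleaned), "kept,", len(rejected), "rejected for missing fields")
```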

Long time-to-value

Source systems often require substantial data integration and preparation before they can provide usable event logs. Because this is a necessary step in process mining, it lengthens the time-to-value of projects.

What if you can’t use event log data?

While event log data is readily available in many source systems, such as the commonly used SAP ERP system, many business applications don’t contain the data required to create event logs for process mining.

One useful tool for checking and viewing Windows event logs is Event Viewer, which helps track down system issues and shows where the underlying log files are stored.

Today’s business operations use a broad set of applications that don’t contain readily available event logs:

- Productivity/teamwork apps (Outlook, Teams, etc.),

- Legacy information systems (LIS),

- 3rd party/vendor systems,

- Proprietary software or reporting,

- Government portals.

The lack of event log data is especially significant in knowledge-heavy sectors such as insurance, financial services, and accounting. In these sectors, many manual and time-consuming processes and workflows span different applications to form a single end-to-end process.

Imagine, for example, an insurance claim or a loan application. In these cases, many activities don’t get tracked in the event logs of the ERP or CRM system, because case experts review or process specific information from various other data sources.

Consider an alternative: hybrid process intelligence

Process mining can be a great source of process intelligence, as long as you have access to event logs and high-quality, structured data. If you don’t have ready access to event logs, or your workflows span many different applications, you may want to consider a hybrid approach.

Windows event logs store crucial information about system, security, and application events. These logs include severity levels and detailed event descriptions, which are essential for monitoring and diagnosing issues, as well as anticipating future problems within a Windows operating system.

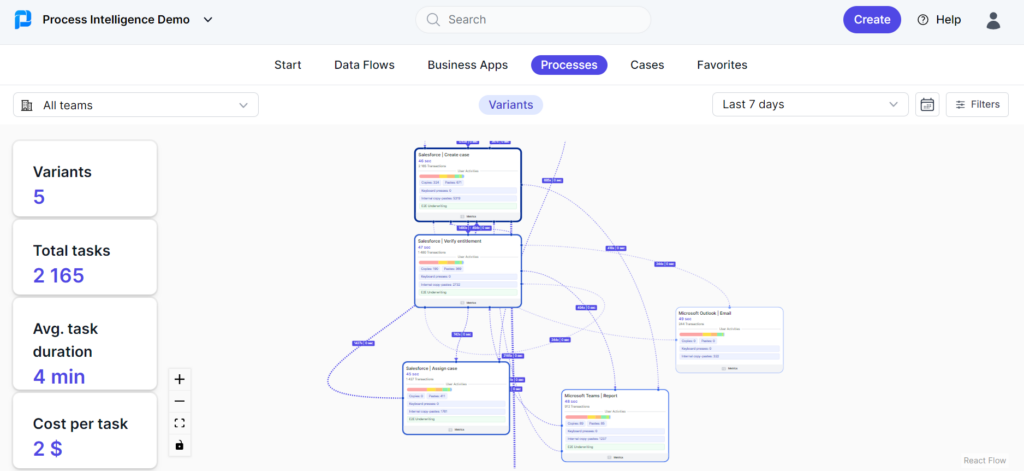

Example of hybrid process intelligence – ProcessMaker Process Intelligence

ProcessMaker PI creates standardized event logs based on business objects in the UI

With a hybrid approach, you are not limited to analyzing existing event logs; solutions like ProcessMaker Process Intelligence generate event logs automatically from business object data in the relevant application interfaces. The result is the familiar benefits of process mining without the hassle of data integration and event log wrangling. For more information, see our latest whitepaper.
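To illustrate the general idea of deriving events from business object data, the Python sketch below compares successive snapshots of a single object and emits an event whenever a field changes. This is not ProcessMaker's implementation, which the article does not describe; the snapshot format, field names, and `events_from_snapshots` helper are hypothetical.

```python
from datetime import datetime

# Hypothetical successive snapshots of one business object (a loan application)
# as it might be observed in an application's interface. Field names are assumptions.
snapshots = [
    (datetime(2024, 8, 1, 9, 0), {"id": "LOAN-7", "status": "Draft", "reviewer": None}),
    (datetime(2024, 8, 1, 11, 30), {"id": "LOAN-7", "status": "Submitted", "reviewer": None}),
    (datetime(2024, 8, 2, 10, 0), {"id": "LOAN-7", "status": "Submitted", "reviewer": "dana"}),
    (datetime(2024, 8, 3, 15, 45), {"id": "LOAN-7", "status": "Approved", "reviewer": "dana"}),
]


def events_from_snapshots(snapshots, case_field="id"):
    """Emit one (case ID, activity, timestamp) event per field that changed between snapshots."""
    events = []
    previous = None
    for ts, state in snapshots:
        if previous is not None:
            for name, value in state.items():
                if name != case_field and previous.get(name) != value:
                    events.append((state[case_field], f"{name} -> {value}", ts))
        previous = state
    return events


for case_id, activity, ts in events_from_snapshots(snapshots):
    print(case_id, activity, ts.isoformat())
```

In this sketch the first snapshot only sets the baseline, so creating the object is not recorded as an event; a fuller version would also track many objects at once and record creation.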